Toxic Language Classifier

A lightweight model for detecting toxic language.

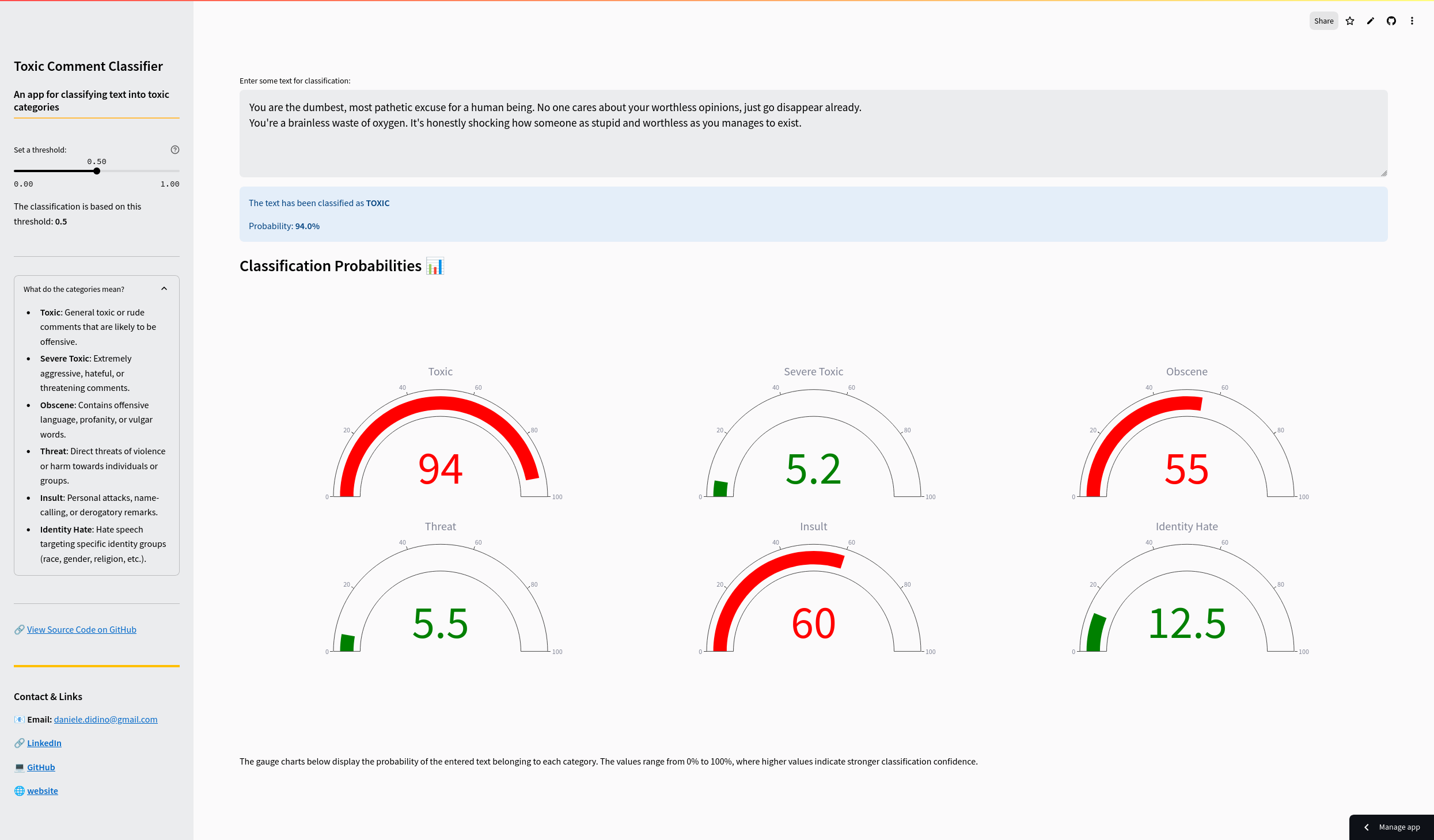

In this project, I set out to build an efficient, lightweight model that classifies toxic content accurately while using minimal computational resources. The model performs multilabel classification: it can assign several types of toxicity (such as insults, threats, or hate speech) to a single piece of text. Because the dataset is compact (around 100,000 short text samples), I chose a hybrid CNN+GRU architecture. The convolutional layers detect local patterns such as short, characteristic word sequences, while the recurrent GRU layer captures the contextual flow of the text, balancing predictive performance against computational cost. The final model is integrated into a Streamlit web app, so it can be tested and explored in real time.
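To make the architecture concrete, here is a dependency-free Python sketch of a CNN+GRU forward pass with a multilabel output head. It is not the project's model: the layer sizes, the label names in `LABELS`, the padding id `PAD_ID`, and the names `TinyCnnGru` and `predict_labels` are hypothetical, the weights are random rather than trained, and a real implementation would typically use a framework such as Keras or PyTorch. What it illustrates is the multilabel head: each label gets its own sigmoid, so several labels can clear the threshold at once, unlike a softmax, which forces the classes to compete for a single probability mass.

```python
import math
import random

# Hypothetical label set for illustration; the real project's labels may differ.
LABELS = ["toxic", "insult", "threat", "hate"]
PAD_ID = 0  # hypothetical padding token id


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class TinyCnnGru:
    """Forward pass only: embedding -> Conv1D + ReLU -> GRU -> one sigmoid per label.

    Weights are random (untrained); this only shows how data flows through the layers.
    """

    def __init__(self, vocab_size, emb_dim=8, n_filters=6, kernel=3,
                 hidden=5, n_labels=len(LABELS), seed=0):
        rng = random.Random(seed)
        self.kernel, self.hidden = kernel, hidden
        self.emb = rand_matrix(vocab_size, emb_dim, rng)
        # Each filter looks at a window of `kernel` consecutive embeddings, flattened.
        self.conv_w = rand_matrix(n_filters, kernel * emb_dim, rng)
        # GRU weights for update (z), reset (r) and candidate (n), applied to [x_t, h].
        self.w_z = rand_matrix(hidden, n_filters + hidden, rng)
        self.w_r = rand_matrix(hidden, n_filters + hidden, rng)
        self.w_n = rand_matrix(hidden, n_filters + hidden, rng)
        # Output head: one logit per label.
        self.head = rand_matrix(n_labels, hidden, rng)

    def conv1d(self, seq):
        """'Valid' 1-D convolution followed by ReLU (biases omitted for brevity)."""
        out = []
        for i in range(len(seq) - self.kernel + 1):
            window = [v for vec in seq[i:i + self.kernel] for v in vec]
            out.append([max(0.0, s) for s in matvec(self.conv_w, window)])
        return out

    def gru(self, seq):
        """Run a GRU over the convolutional features and return the final hidden state."""
        h = [0.0] * self.hidden
        for x in seq:
            z = [sigmoid(s) for s in matvec(self.w_z, x + h)]
            r = [sigmoid(s) for s in matvec(self.w_r, x + h)]
            gated = [ri * hi for ri, hi in zip(r, h)]
            n = [math.tanh(s) for s in matvec(self.w_n, x + gated)]
            h = [(1 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h)]
        return h

    def predict_proba(self, token_ids):
        ids = list(token_ids)
        # Pad short inputs so at least one convolution window exists.
        ids += [PAD_ID] * max(0, self.kernel - len(ids))
        features = self.conv1d([self.emb[t] for t in ids])
        return [sigmoid(s) for s in matvec(self.head, self.gru(features))]


def predict_labels(model, token_ids, threshold=0.5):
    """Multilabel decision: keep every label whose own probability clears the threshold."""
    probs = model.predict_proba(token_ids)
    return [label for label, p in zip(LABELS, probs) if p >= threshold]


if __name__ == "__main__":
    model = TinyCnnGru(vocab_size=50)
    print(predict_labels(model, [3, 17, 8, 42, 5]))
```

Because the sigmoids are independent, the threshold can also be tuned per label, which is a common choice when some toxicity types are much rarer than others.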

Dailogy App

A system for detecting and improving dysfunctional language.

This project develops an app that transforms dysfunctional and toxic language into more respectful and inclusive communication. Our current focus is on interactions between couples or ex-couples who need to communicate regularly. By building tools that detect and mitigate harmful language, we aim to keep these ongoing exchanges respectful.

Chatbot - Agentur für Arbeit

Chat with the documents from the Agentur für Arbeit.

This chatbot uses publicly available PDF documents from the Agentur für Arbeit as its primary data source. It answers queries with a Retrieval-Augmented Generation (RAG) pipeline: relevant passages are retrieved from these documents and passed to the language model as context. Along with each answer, the chatbot reports its sources, including the title and page number of each referenced document.
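The retrieval-with-citations step can be sketched as follows. This is an illustrative assumption, not the chatbot's actual code: it swaps dense embeddings and a vector store for a simple bag-of-words cosine similarity, leaves out the call to the language model, and uses hypothetical names (`Chunk`, `retrieve`, `build_prompt`, `format_sources`). What it shows is the design that makes source details possible: each chunk keeps the title and page number of the PDF it came from, so that metadata travels with the retrieved text into the prompt and the answer.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    """A passage of a source PDF plus the metadata needed to cite it."""
    text: str
    title: str  # title of the source document
    page: int   # page number within that document


def tokenize(text):
    # Lowercased word tokens; \w also matches Unicode letters such as "ü".
    return re.findall(r"\w+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-frequency Counters."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Return up to k chunks most similar to the query, ignoring zero-score chunks."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(c.text))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for score, c in scored[:k] if score > 0]


def build_prompt(query, retrieved):
    """Number each retrieved chunk and tag it with its title and page for citation."""
    context = "\n\n".join(
        f"[{i}] ({c.title}, p. {c.page})\n{c.text}" for i, c in enumerate(retrieved, start=1)
    )
    return (
        "Answer using only the context below and cite sources as [n].\n\n"
        f"{context}\n\nQuestion: {query}\nAnswer:"
    )


def format_sources(retrieved):
    """Human-readable source list to show alongside the generated answer."""
    return [f"[{i}] {c.title}, page {c.page}" for i, c in enumerate(retrieved, start=1)]


if __name__ == "__main__":
    # Made-up example chunks; real chunks would come from the parsed PDFs.
    chunks = [
        Chunk("Register as a job seeker online before your contract ends.", "Example guide A", 3),
        Chunk("Job seeker registration can also be done by phone.", "Example guide A", 4),
    ]
    hits = retrieve("How do I register as a job seeker?", chunks)
    print(build_prompt("How do I register as a job seeker?", hits))
    print(format_sources(hits))
```

In a full pipeline, the string from `build_prompt` would be sent to the language model, and the output of `format_sources` would be displayed with its answer.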